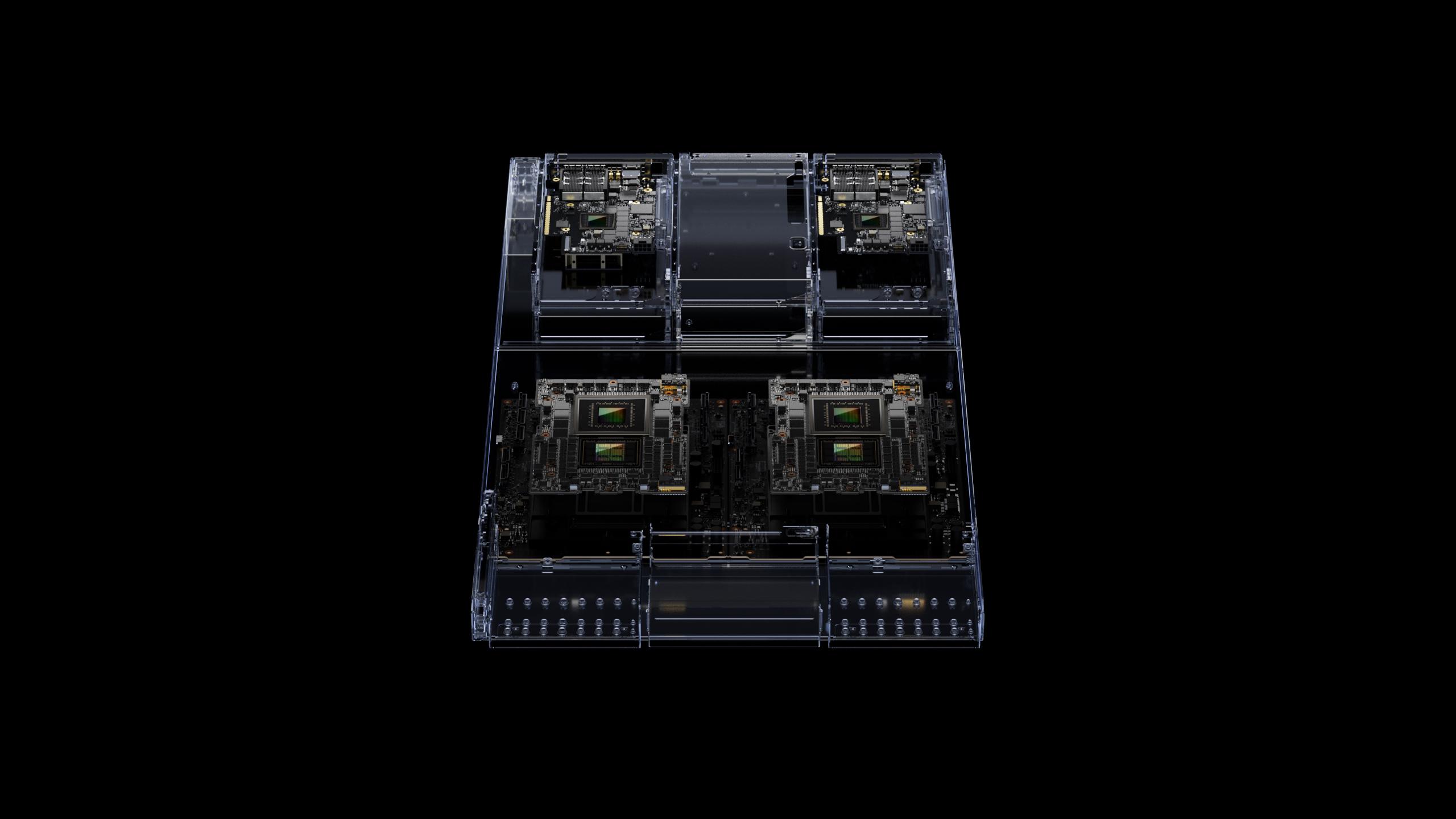

Nvidia, the leading provider of artificial intelligence (AI) chips, has announced a more powerful AI chip scheduled for release in the second quarter of 2024. The chip, called the GH200, is based on the Grace Hopper Superchip architecture and will offer triple the memory capacity of Nvidia's current generation of AI chips.

The GH200 is designed for generative AI workloads such as large language models (LLMs) and recommender systems. LLMs are AI models that can generate text, translate languages, and write different kinds of creative content. Recommender systems are AI systems that suggest products or services to users based on their past behavior.

The GH200’s increased memory capacity will allow it to train larger LLMs and recommender systems, because a model's parameters and training state must fit in accelerator memory. Larger models could in turn improve performance in AI applications such as natural language processing, computer vision, and robotics.
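To make the link between memory capacity and model size concrete, here is a rough back-of-the-envelope sketch. It assumes the commonly cited rule of thumb of about 16 bytes per parameter for mixed-precision training with the Adam optimizer (weights, gradients, and optimizer state), and it ignores activations and other overhead. The capacities of 100 GB and 300 GB are hypothetical placeholders chosen only to illustrate a tripling; they are not GH200 specifications.

```python
# Rough estimate only: assumes ~16 bytes per parameter for mixed-precision
# Adam training (weights + gradients + optimizer state). Activations,
# buffers, and framework overhead are ignored.
BYTES_PER_PARAM = 16  # assumption, not a vendor figure


def training_memory_gb(num_params: int, bytes_per_param: int = BYTES_PER_PARAM) -> float:
    """Approximate memory (in GB) needed to hold the training state of a model."""
    return num_params * bytes_per_param / 1e9


def max_params_for_capacity(capacity_gb: float, bytes_per_param: int = BYTES_PER_PARAM) -> int:
    """Approximate largest parameter count whose training state fits in capacity_gb."""
    return int(capacity_gb * 1e9 // bytes_per_param)


if __name__ == "__main__":
    # Hypothetical capacities: a baseline and a device with triple the memory.
    baseline_gb = 100
    tripled_gb = 3 * baseline_gb

    print(f"7B-parameter model needs about {training_memory_gb(7_000_000_000):.0f} GB")
    print(f"Baseline fits about {max_params_for_capacity(baseline_gb):,} parameters")
    print(f"Tripled fits about {max_params_for_capacity(tripled_gb):,} parameters")
```

Under these assumptions the largest trainable model scales linearly with memory, so tripling capacity roughly triples the parameter count that fits on a single device.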

Nvidia is also touting the GH200’s energy efficiency, claiming that the chip will be 30% more energy efficient than its current generation of AI chips. If that claim holds, the GH200 would be more cost-effective to deploy in data centers.
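The effect of an efficiency claim on energy use depends on how it is defined. The short sketch below assumes that "30% more energy efficient" means 30% more work per unit of energy, which is one plausible reading and not something the announcement specifies. The baseline energy figure is a made-up example value.

```python
# Assumption: "X% more energy efficient" means X% more work per unit of energy.
# Under that reading, the same workload needs baseline / (1 + X) energy.


def energy_for_same_work(baseline_kwh: float, efficiency_gain: float) -> float:
    """Energy needed to do the baseline workload on the more efficient chip."""
    return baseline_kwh / (1.0 + efficiency_gain)


def energy_saving_fraction(efficiency_gain: float) -> float:
    """Fraction of energy saved for a fixed workload."""
    return 1.0 - 1.0 / (1.0 + efficiency_gain)


if __name__ == "__main__":
    baseline_kwh = 1300.0  # hypothetical workload energy on the current generation
    gain = 0.30            # the claimed 30% efficiency improvement

    print(f"Energy on new chip: {energy_for_same_work(baseline_kwh, gain):.0f} kWh")
    print(f"Energy saved: {energy_saving_fraction(gain):.1%}")
```

Under this reading, a 30% efficiency gain cuts the energy for a fixed workload by about 23%, not 30%, which is worth keeping in mind when estimating data center cost savings.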

The GH200 is a significant development in the AI chip market. It is the most powerful AI chip Nvidia has announced to date, and it is designed for the most demanding AI workloads. It is likely to accelerate the adoption of AI in industries such as healthcare, finance, and retail.

Nvidia also announced a new server platform designed to support the chip. The platform, called the Grace Hopper SuperPOD, is a modular system that can be scaled to meet the needs of any AI workload. It is scheduled for release in the first quarter of 2024.

The announcement of the GH200 and the Grace Hopper SuperPOD signals that Nvidia intends to keep its lead in the AI chip market. The company is investing heavily in research and development to power the next generation of AI applications.